This guide breaks down the electricity consumption of graphics cards into categories, offering estimates for various GPU memory bus widths (bit sizes), usage scenarios, and popular models from NVIDIA and AMD.


Understanding how much electricity your graphics card consumes can provide valuable insights for managing energy costs and optimizing your system. Whether you’re using a GPU for intense gaming, everyday desktop tasks, or cryptocurrency mining, power consumption varies significantly depending on the card’s architecture, bit size, and usage scenario.

As with hard drives and liquid CPU coolers, it is worth knowing how much electricity a graphics card (GPU) uses. GPUs are essential components in modern computers, especially for gaming, video editing, and other graphically intensive tasks, but their power consumption varies significantly with architecture, bit size, and usage.

Understanding the Electricity Consumption of Graphics Cards

Here is a breakdown of graphics card electricity consumption by category to help you understand how much electricity your GPU might use.

Factors Affecting GPU Power Consumption

Before diving into specific categories, it’s important to understand the main factors that affect a GPU’s power consumption:

- Architecture: Newer architectures are generally more power-efficient.

- Bit Size: A wider memory bus (higher bit size) moves more data per clock cycle and usually appears on larger, higher-end GPUs, which typically increases power consumption.

- Clock Speed: Higher clock speeds can lead to increased power usage.

- Usage Scenario: Gaming, mining, and idle usage all have different power consumption profiles.

1. Power Consumption by Bit Size

a. 128-Bit Graphics Cards

128-bit graphics cards are typically entry-level or mid-range cards, suitable for less demanding tasks such as casual gaming or general desktop use.

- Average Power Consumption: 50-75 watts

- Monthly Electricity Usage (upper end of the range, running 24 hours a day for 30 days, or 720 hours):

- $\frac{75 \text{ W}}{1000} \times 720 \text{ hours} = 54 \text{ kWh}$
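The same arithmetic can be sketched as a small Python helper. The function name `monthly_kwh` and its parameters are illustrative choices, not something from the article. It assumes a steady power draw and a 30-day month.

```python
def monthly_kwh(watts: float, hours_per_day: float = 24.0, days: int = 30) -> float:
    """Convert a steady power draw in watts into energy in kWh for one month.

    Assumes the card draws `watts` for `hours_per_day` hours on each of `days` days.
    """
    return watts * hours_per_day * days / 1000


# Upper end of the 128-bit range, running around the clock (720 hours).
print(monthly_kwh(75))  # 54.0

# The same helper covers part-time use, e.g. 300 W for 4 hours a day.
print(monthly_kwh(300, hours_per_day=4))  # 36.0
```

Multiplying before dividing by 1000 keeps the intermediate values whole numbers for typical wattages, which avoids small floating-point rounding surprises in the printed result.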

b. 192-Bit Graphics Cards

192-bit graphics cards are mid-range to upper-mid-range cards, suitable for more demanding gaming and graphic design tasks.

- Average Power Consumption: 100-150 watts

- Monthly Electricity Usage:

- $\frac{150 \text{ W}}{1000} \times 720 \text{ hours} = 108 \text{ kWh}$

c. 256-Bit Graphics Cards

256-bit graphics cards are typically high-end cards, capable of handling intensive gaming, VR, and professional graphic work.

- Average Power Consumption: 150-250 watts

- Monthly Electricity Usage:

- $\frac{250 \text{ W}}{1000} \times 720 \text{ hours} = 180 \text{ kWh}$

d. 384-Bit Graphics Cards

384-bit graphics cards are among the most powerful consumer-grade cards, used for ultra-high-end gaming and professional tasks.

- Average Power Consumption: 200-350 watts

- Monthly Electricity Usage:

- $\frac{350 \text{ W}}{1000} \times 720 \text{ hours} = 252 \text{ kWh}$

Power Consumption by Bit Size Overview:

| Bit Size | Power Consumption (Watts) | Monthly Electricity Usage (kWh) |
|---|---|---|
| 128-Bit | 50-75 | 54 |
| 192-Bit | 100-150 | 108 |
| 256-Bit | 150-250 | 180 |
| 384-Bit | 200-350 | 252 |
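The monthly figures in this overview come from the top of each wattage range. As a rough sketch, the snippet below computes both ends of each range for a card running 720 hours a month. The dictionary and function names are illustrative, and the wattage ranges are copied from the table.

```python
# Wattage ranges per memory bus width, copied from the overview table.
POWER_RANGES_W = {
    "128-bit": (50, 75),
    "192-bit": (100, 150),
    "256-bit": (150, 250),
    "384-bit": (200, 350),
}

HOURS_PER_MONTH = 24 * 30  # continuous use over a 30-day month


def kwh_range(low_w: float, high_w: float, hours: float = HOURS_PER_MONTH):
    """Return (low, high) monthly energy in kWh for a wattage range."""
    return (low_w * hours / 1000, high_w * hours / 1000)


for bus_width, (low_w, high_w) in POWER_RANGES_W.items():
    low_kwh, high_kwh = kwh_range(low_w, high_w)
    print(f"{bus_width}: {low_kwh:.0f}-{high_kwh:.0f} kWh per month")
```

For the 128-bit class this gives 36-54 kWh, so the single figure in the table is best read as an upper bound.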

What does "bit" mean for a GPU? The bit width of a GPU refers to the width of its memory bus. At the same memory speed, a wider bus transfers more data per cycle between the memory and the graphics processor than a narrower one.
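To make the link between bus width and data transfer concrete, here is a minimal sketch of the usual peak bandwidth estimate: bus width in bits, divided by 8 to get bytes, multiplied by the per-pin data rate. The 14 Gbps data rate is only an illustrative assumption and does not come from the article. The function name is hypothetical.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Estimate peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width (e.g. 128, 192, 256, 384)
    data_rate_gbps: effective per-pin data rate in gigabits per second
    """
    return bus_width_bits / 8 * data_rate_gbps


ASSUMED_DATA_RATE_GBPS = 14.0  # illustrative value, not taken from the article

for width in (128, 192, 256, 384):
    bandwidth = memory_bandwidth_gbs(width, ASSUMED_DATA_RATE_GBPS)
    print(f"{width}-bit bus: {bandwidth:.0f} GB/s")
```

At the same data rate, doubling the bus width from 128 to 256 bits doubles the estimated bandwidth, from 224 to 448 GB/s.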

2. Power Consumption by Usage Scenario

a. Gaming

Gaming puts a significant load on the GPU, leading to higher power consumption. The average power usage during gaming varies depending on the complexity of the game and the GPU’s efficiency.

- Average Power Consumption: 150-300 watts

- Monthly Electricity Usage (assuming 4 hours of gaming per day):

- $\frac{300 \text{ W}}{1000} \times 120 \text{ hours} = 36 \text{ kWh}$

b. Idle/Desktop Use

When idle or used for simple desktop tasks, the GPU’s power consumption is much lower.

- Average Power Consumption: 10-30 watts

- Monthly Electricity Usage:

- $\frac{30 \text{ W}}{1000} \times 720 \text{ hours} = 21.6 \text{ kWh}$

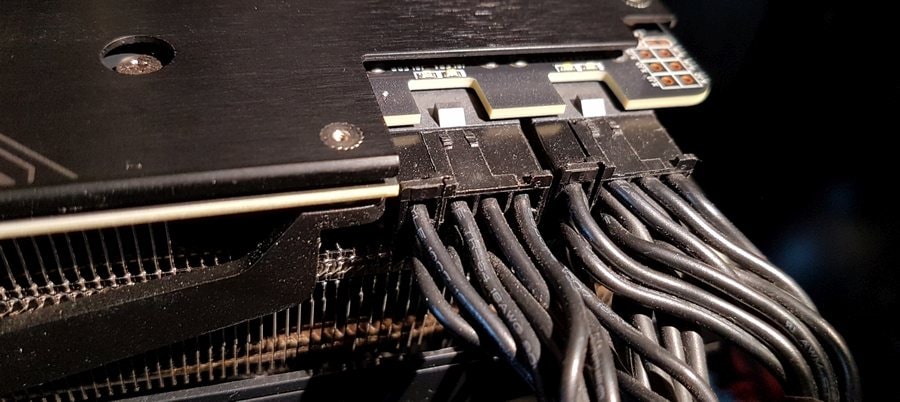

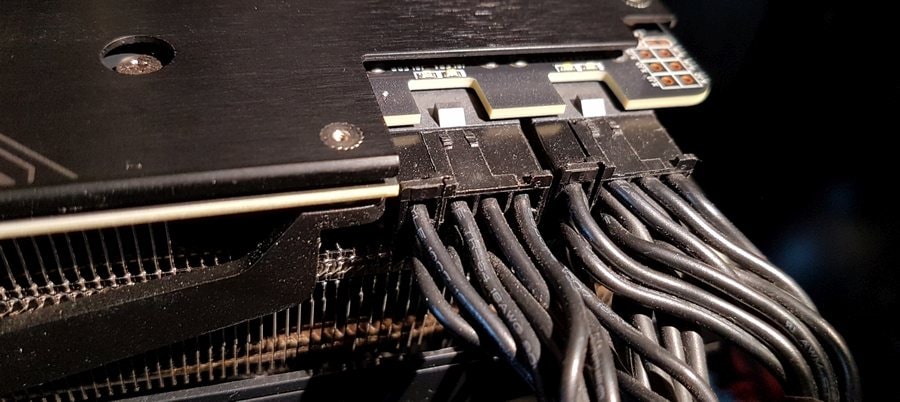

c. Cryptocurrency Mining

Cryptocurrency mining can be extremely demanding on GPUs, often running them at maximum capacity for extended periods.

- Average Power Consumption: 200-350 watts

- Monthly Electricity Usage:

- $\frac{350 \text{ W}}{1000} \times 720 \text{ hours} = 252 \text{ kWh}$

Power Consumption by Usage Scenario Overview:

| Usage Scenario | Power Consumption (Watts) | Monthly Electricity Usage (kWh) |
|---|---|---|
| Gaming (4 hrs/day) | 150-300 | 36 |
| Idle/Desktop | 10-30 | 21.6 |
| Cryptocurrency Mining | 200-350 | 252 |
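Most systems mix these scenarios over a day rather than staying in one. As a rough sketch, the snippet below combines 4 hours of gaming at 300 W with 20 hours of idle/desktop use at 30 W. The split of hours and the function name are assumptions for illustration only.

```python
def mixed_monthly_kwh(daily_profile, days: int = 30) -> float:
    """Estimate monthly energy in kWh from a daily usage profile.

    daily_profile: list of (watts, hours_per_day) pairs, one per scenario.
    """
    total_hours = sum(hours for _, hours in daily_profile)
    if total_hours > 24:
        raise ValueError("daily profile exceeds 24 hours")
    watt_hours_per_day = sum(watts * hours for watts, hours in daily_profile)
    return watt_hours_per_day * days / 1000


# Hypothetical day: 4 hours gaming at 300 W, 20 hours idle/desktop at 30 W.
profile = [(300, 4), (30, 20)]
print(mixed_monthly_kwh(profile))  # 54.0
```

In this example gaming contributes 36 kWh and idle time 18 kWh, so idle draw is a meaningful share of the total when the machine is left on all day.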

3. Power Consumption by GPU Manufacturer and Model

a. NVIDIA

NVIDIA’s GPUs are known for their performance and efficiency. Here are the rated power figures for some popular models, with monthly usage calculated as if the card drew that power around the clock:

- GTX 1660 Super: 125 watts

  - Monthly Electricity Usage:

    - $\frac{125 \text{ W}}{1000} \times 720 \text{ hours} = 90 \text{ kWh}$

- RTX 3060: 170 watts

  - Monthly Electricity Usage:

    - $\frac{170 \text{ W}}{1000} \times 720 \text{ hours} = 122.4 \text{ kWh}$

- RTX 3080: 320 watts

  - Monthly Electricity Usage:

    - $\frac{320 \text{ W}}{1000} \times 720 \text{ hours} = 230.4 \text{ kWh}$

b. AMD

AMD’s GPUs are also popular, especially for their performance-to-price ratio. Here are the rated power figures for some examples, again assuming continuous use:

- Radeon RX 580: 185 watts

  - Monthly Electricity Usage:

    - $\frac{185 \text{ W}}{1000} \times 720 \text{ hours} = 133.2 \text{ kWh}$

- Radeon RX 5700 XT: 225 watts

  - Monthly Electricity Usage:

    - $\frac{225 \text{ W}}{1000} \times 720 \text{ hours} = 162 \text{ kWh}$

- Radeon RX 6800 XT: 300 watts

  - Monthly Electricity Usage:

    - $\frac{300 \text{ W}}{1000} \times 720 \text{ hours} = 216 \text{ kWh}$

Power Consumption of Popular Models Overview:

| Model | Power Consumption (Watts) | Monthly Electricity Usage (kWh) |
|---|---|---|
| NVIDIA GTX 1660 Super | 125 | 90 |
| AMD Radeon RX 580 | 185 | 133.2 |
| NVIDIA RTX 3080 | 320 | 230.4 |
| AMD Radeon RX 6800 XT | 300 | 216 |
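To turn these kWh figures into an estimated bill, multiply by your electricity rate. The sketch below uses a purely hypothetical rate of 0.15 per kWh (in whatever currency you pay in); substitute the rate from your own bill. The wattages are the ones listed in the table, and the function name is illustrative.

```python
# Hypothetical electricity rate per kWh; replace with the rate on your bill.
ASSUMED_RATE_PER_KWH = 0.15

# Wattages copied from the models table.
MODEL_WATTS = {
    "NVIDIA GTX 1660 Super": 125,
    "AMD Radeon RX 580": 185,
    "NVIDIA RTX 3080": 320,
    "AMD Radeon RX 6800 XT": 300,
}


def monthly_cost(watts: float, hours_per_day: float = 24.0, days: int = 30,
                 rate_per_kwh: float = ASSUMED_RATE_PER_KWH) -> float:
    """Estimate the monthly electricity cost of a steady power draw."""
    kwh = watts * hours_per_day * days / 1000
    return kwh * rate_per_kwh


for model, watts in MODEL_WATTS.items():
    continuous = monthly_cost(watts)
    four_hours = monthly_cost(watts, hours_per_day=4)
    print(f"{model}: {continuous:.2f} (24/7), {four_hours:.2f} (4 h/day)")
```

Comparing the two columns shows how strongly the bill depends on hours of use rather than on the card's rated wattage alone.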

Conclusion

The power usage of GPUs varies widely depending on their bit size, usage scenario, and specific model. Here’s a summary of the estimated monthly electricity consumption for different categories:

- 128-Bit Graphics Cards: A draw of 50-75 watts translates to up to about 54 kWh per month when running continuously at the top of that range.

- 192-Bit Graphics Cards: A draw of 100-150 watts results in up to approximately 108 kWh per month.

- 256-Bit Graphics Cards: At 150-250 watts, expect up to around 180 kWh per month.

- 384-Bit Graphics Cards: High-end models consuming 200-350 watts can use up to 252 kWh per month.

For specific usage scenarios:

- Gaming: Graphics cards may consume 150-300 watts, leading to up to 36 kWh per month when gaming for 4 hours daily.

- Idle/Desktop Use: Lower power consumption of 10-30 watts results in up to about 21.6 kWh per month with the system on around the clock.

- Cryptocurrency Mining: Continuous mining at 200-350 watts can consume up to 252 kWh per month.

Examples from popular models:

- NVIDIA GTX 1660 Super: 125 watts, resulting in 90 kWh monthly.

- AMD Radeon RX 580: 185 watts, which equates to 133.2 kWh monthly.

By analyzing these figures, you can better estimate your graphics card’s impact on your electricity bill and make informed decisions about your hardware usage. Whether you’re upgrading your system or looking to optimize your current setup, these insights can help you manage energy consumption more effectively.

Actual power consumption can vary based on factors such as system configuration, workload, and ambient temperature. Always refer to your GPU’s specifications and use monitoring tools to get precise measurements for your specific setup.
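As one example of such a monitoring tool, NVIDIA's `nvidia-smi` command-line utility can report the current board power draw. The sketch below wraps it in Python. It assumes an NVIDIA card with the driver installed and `nvidia-smi` on the PATH; AMD cards need a different tool, and some GPUs do not expose power readings at all. The function names are illustrative.

```python
import shutil
import subprocess
from typing import List, Optional


def parse_power_draw(csv_text: str) -> List[Optional[float]]:
    """Parse one power reading per line (watts); non-numeric lines become None."""
    readings: List[Optional[float]] = []
    for line in csv_text.strip().splitlines():
        try:
            readings.append(float(line.strip()))
        except ValueError:
            # Some GPUs report "[N/A]" or similar instead of a number.
            readings.append(None)
    return readings


def read_gpu_power_watts() -> List[Optional[float]]:
    """Return the current power draw of each NVIDIA GPU, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=power.draw",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except subprocess.CalledProcessError:
        return []
    return parse_power_draw(result.stdout)


if __name__ == "__main__":
    print(read_gpu_power_watts())
```

A single reading is only a snapshot. Sampling repeatedly during gaming and at idle gives a better basis for the monthly estimates above than the rated wattage.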